Remember the Windows XP/Vista days? Life was a lot simpler from a security perspective. Yeah, we got viruses, yeah, we got spyware, and yeah, we got malware like we have been used to for the last decade. What did Microsoft have to offer us in terms of protection and security mechanisms?

- We got introduced to User Account Control (UAC) in Vista

- We got introduced to Windows Defender which was a form of Forefront Protection

- We got security updates and such from Windows Update

- We got BitLocker to do drive encryption

- Windows Firewall could filter inbound and outbound traffic!

- Drivers needed to be digitally signed!

But of course a lot was still up to the third-party vendors that delivered their endpoint security solutions (Norman, Symantec, Trend, etc.), which were there to stop whatever else tried to come in.

A lot was introduced into the operating system, especially in Vista, to protect against viruses and malware that required elevated user rights (stopping those attacks was the aim of UAC). Now fast forward to 2016 and the security landscape has changed. Most IT pros know that it is no longer a question of if you get hacked, because in most cases YOU will get hacked! Microsoft is fully aware of this and has stepped up their game (leveled up to lvl 100!).

Organized crime is now the largest threat, and we have different types of ransomware that can automatically encrypt files and demand large amounts of money to decrypt them. Ransomware is always evolving, which makes it hard to rely on signature-based detection systems, so often the job is to minimize the damage.

Another issue is usernames and passwords. With the large number of websites getting hacked each day, and people using the same username and password both at work and for personal stuff, two-factor authentication is becoming more and more the de facto standard.

And of course in larger enterprises there is always the risk of getting hacked from the “inside”, so you need security mechanisms that can protect against these types of attacks too.

So there have been numerous security enhancements in Windows 10, because Microsoft wants consumers to have built-in protection instead of the 60-day trial from some “random” third-party vendor that they get when they buy a computer from the store.

So what’s new from a security perspective in Windows 10?

- Microsoft Passport

- Windows Hello (which allows for biometric or PIN-based two-factor authentication, and makes two-factor authentication more user friendly)

- Credential Guard (Credential Guard aims to protect domain corporate credentials from theft and reuse by malware. With Credential Guard, Windows 10 implemented an architectural change that fundamentally prevents the current forms of the pass-the-hash (PtH) attack.)

- Windows Defender (with Network Inspection System) which is now enabled by default

- BitLocker Network Unlock (allows corporate computers to boot without typing the BitLocker PIN when they are on the corporate network)

- SMB signing and mutual authentication (such as Kerberos) to SYSVOL (to mitigate MitM attacks)

- UEFI Secure boot

- Early Launch Antimalware (Which allows certified antimalware solutions to start before malware processes start to run)

- Health Attestation (The device’s firmware logs the boot process, and Windows 10 can send it to a trusted server that can check and assess the device’s health.)

- Device Guard (to only run code that’s signed by trusted signers, as defined by your Code Integrity policy)

- Windows Heap

  - Internal data structures that the heap uses are now better protected against memory corruption.

  - Heap memory allocations now have randomized locations and sizes, which makes it more difficult for an attacker to predict the location of critical memory to overwrite. Specifically, Windows 10 adds a random offset to the address of a newly allocated heap, which makes the allocation much less predictable.

  - Windows 10 uses “guard pages” before and after blocks of memory as tripwires. If an attacker attempts to write past a block of memory (a common technique known as a buffer overflow), the attacker will have to overwrite a guard page. Any attempt to modify a guard page is considered a memory corruption, and Windows 10 responds by instantly terminating the app.
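To make the heap points a bit more concrete, here is a toy model in Python. It is only a sketch of the idea (a random starting offset plus guard regions acting as tripwires), not how the Windows heap is actually implemented. The names `ToyHeap`, `HeapCorruption` and `GUARD_SIZE` are made up for the illustration, and raising an exception stands in for the app being terminated.

```python
import secrets

GUARD_SIZE = 16  # toy stand-in for a guard page (hypothetical size)


class HeapCorruption(Exception):
    """Raised where the real system would terminate the app."""


class ToyHeap:
    def __init__(self, size=4096, max_random_offset=256):
        self.memory = bytearray(size)
        # Random starting offset, so block addresses differ from run to run.
        self.next_free = secrets.randbelow(max_random_offset)
        self.guard_addresses = set()

    def alloc(self, length):
        """Reserve `length` bytes with a guard region before and after."""
        start = self.next_free + GUARD_SIZE
        end = start + length
        if end + GUARD_SIZE > len(self.memory):
            raise MemoryError("toy heap exhausted")
        self.guard_addresses.update(range(start - GUARD_SIZE, start))
        self.guard_addresses.update(range(end, end + GUARD_SIZE))
        self.next_free = end + GUARD_SIZE
        return start

    def write(self, address, data):
        """Write bytes at `address`; touching a guard region is fatal."""
        end = address + len(data)
        if address < 0 or end > len(self.memory):
            raise HeapCorruption("write outside the heap")
        if any(a in self.guard_addresses for a in range(address, end)):
            raise HeapCorruption("write touched a guard region")
        self.memory[address:end] = data


if __name__ == "__main__":
    heap = ToyHeap()
    block = heap.alloc(8)
    heap.write(block, b"A" * 8)       # fits inside the block
    try:
        heap.write(block, b"B" * 9)   # one byte too many: hits the tripwire
    except HeapCorruption as err:
        print("terminated:", err)
```

The overflow does not have to reach anything interesting before it is caught, because the very first byte written past the block lands in the guard region.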

So all these security features are included in the operating system. But what if a disgruntled employee wants to take files outside of the business, or if files get lost on a USB thumb drive? There are more features to come!

First up is Windows Information Protection (formerly known as Enterprise Data Protection), which is coming in the next release of Windows 10 (the Windows 10 Anniversary Update).

This feature will allow for separation of personal and corporate data, and corporate data can be wiped regardless of which device it resides on. Based on policies, data can also be encrypted at rest.

This will also be visible when saving files to the local system, where corporate content can be stored in specific folders.

Now, this feature handles data protection and leak protection for files. But back to ransomware and such: in many cases it is a matter of minimizing the damage from the threats that occur and getting an overview of what’s happening. Microsoft found that it takes an enterprise more than 200 days to detect a security breach and 80 days to contain it. During this time, attackers can wreak havoc on a corporate network.

Windows Defender Advanced Threat Protection

Enter Windows Defender Advanced Threat Protection! This feature is now in public preview and will be available for Windows 10 Enterprise users. It leverages the Windows Defender feature in Windows 10 to do post-breach investigation, and it is “not a realtime protection feature”. The feature consists of 3 parts:

1. The Client: built into Windows 10 Anniversary Update, that logs detailed security events and behaviors on the endpoint. It’s a fully integrated component of the Windows 10 Operating System.

2. Cloud Security Analytics Service: combines data from endpoints with Microsoft’s broad data optics from over 1 billion Windows devices, 2.5 trillion indexed Web URLs, 600 million online reputation look-ups, and over 1 million suspicious files analyzed to detect anomalous behaviors, adversary techniques and identify similarities to known attacks. The service runs on Microsoft’s scalable Big Data platform, and combines Indicators of Attacks (IOAs), behavioral analytics, and machine learning rules.

3. Microsoft and Community Threat Intelligence: Microsoft’s own hunters and researchers constantly investigate data, identify new behavioral patterns, and correlate collected data with existing Indicators of Compromise (IOCs) collected from past attacks and the security community.

Since the agent is already “built-in”, it’s a matter of on-boarding the client and getting it up and running. As part of the public preview I have one of my computers added to the solution.

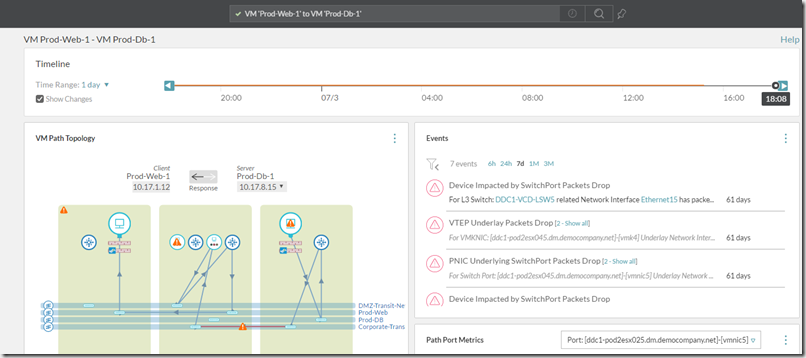

As we can see, we have a timeline of the different processes and threats that get detected. I did a simple EICAR test, which was automatically removed by Windows Defender but was also added to ATP.

I can also do a deeper dive into a specific event to see what happened.

I can also see, for instance, which IP addresses have been contacted from the corporate network, for example if a computer or a group of computers has been communicating with a “known” C&C server for botnets.

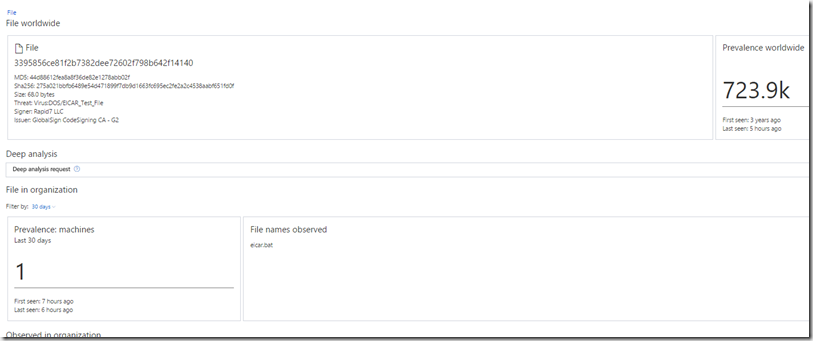

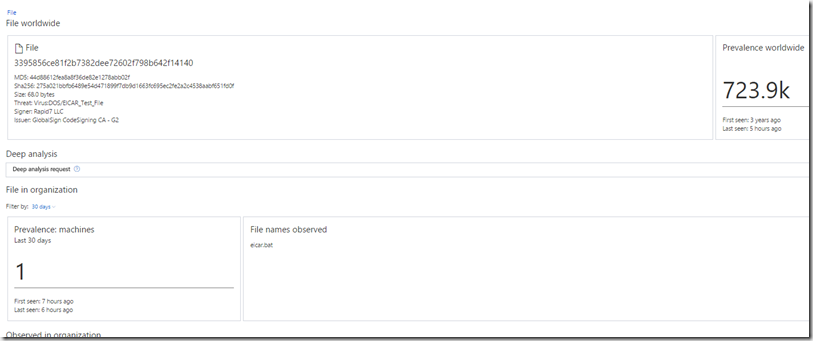
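The C&C check described above boils down to matching observed connections against a list of known bad addresses. Below is a minimal sketch of that idea in Python. It is not how ATP does it internally: the function name, the machine names and the `KNOWN_C2` list are all made up for the example, and the addresses come from the reserved documentation ranges.

```python
from collections import defaultdict
from ipaddress import ip_address

# Hypothetical threat-intelligence list (documentation-range addresses only).
KNOWN_C2 = {ip_address("203.0.113.10"), ip_address("198.51.100.77")}


def machines_contacting_c2(connections, known_c2=KNOWN_C2):
    """Group known C&C addresses by the machine that contacted them.

    `connections` is an iterable of (machine_name, remote_ip_string) pairs.
    """
    hits = defaultdict(set)
    for machine, remote in connections:
        remote_ip = ip_address(remote)
        if remote_ip in known_c2:
            hits[machine].add(str(remote_ip))
    return {machine: sorted(ips) for machine, ips in hits.items()}


if __name__ == "__main__":
    observed = [
        ("LAPTOP-01", "203.0.113.10"),
        ("LAPTOP-02", "192.0.2.5"),       # not on the list, ignored
        ("DESKTOP-07", "203.0.113.10"),
        ("DESKTOP-07", "198.51.100.77"),
    ]
    for machine, ips in machines_contacting_c2(observed).items():
        print(machine, "->", ", ".join(ips))
```

Grouping by machine is what makes it easy to spot the "group of computers" case, where several endpoints talk to the same address.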

We can also deep-dive into detected malware to see occurrences worldwide from Microsoft (a lot of EICAR occurrences…). Also, I can see if this has been observed from other agents in the organization.

NOTE: I had some issues with the agent on my laptop, since for some reason it only reported back data every 60 minutes. This was because my laptop wasn’t connected to a power source, so in order to reduce battery usage it fell back to that setting. It will do the same on a metered connection. When I connected a power source again, it went back to sending data every 5 minutes.
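The behavior in the note can be written down as a tiny rule. This is just my reading of what I observed, sketched in Python; the function and parameter names are made up, and the real agent may take other conditions into account.

```python
def reporting_interval_minutes(on_ac_power: bool, metered_connection: bool) -> int:
    """Return the observed reporting interval in minutes.

    Assumption based on the note above: on battery or on a metered
    connection the agent falls back to 60 minutes, otherwise 5.
    """
    if not on_ac_power or metered_connection:
        return 60
    return 5


if __name__ == "__main__":
    print(reporting_interval_minutes(on_ac_power=False, metered_connection=False))
    print(reporting_interval_minutes(on_ac_power=True, metered_connection=False))
```

So if a test machine seems slow to show up in the portal, check the power cable and the connection type first.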

I see this solution as a preview of what’s to come from ATP. As of now it gives good insight into “what’s happening”, and with the timeline we have a good overview of the history. Microsoft has A LOT of data from billions of devices, through Windows Update, Defender, and System Center Endpoint Protection, and a lot of new data will come from Microsoft OMS as well. This will clearly be the stepping stone into more advanced protection features from Microsoft.